Delphi-2M lifetime disease risk isn’t a verdict—it’s lead time: a generative model from EMBL-EBI, DKFZ and the University of Copenhagen that maps 1,000+ conditions across a lifetime.

If your medical record could talk, what story would it tell about the next 20 years of your health? Delphi-2M, a generative, transformer-based model developed by EMBL-EBI, DKFZ, and the University of Copenhagen, aims to answer this by mapping a person’s time-varying risk for over 1,000 diseases and estimating when they’re likely to appear.

Trained on population-scale records and validated across borders, Delphi-2M moves beyond one-off calculators to a probabilistic life-course risk map. The promise is precision prevention and smarter health-system planning; the price of admission is doing the hard work on bias, consent, and governance so the tool helps patients without widening inequities.

The model is built on transformer architectures (the same general family behind modern large language models), but with a twist. Instead of words in a sentence, the AI treats a life as a sequence of coded “events”: diagnoses, lab signals, procedures, demographics, lifestyle factors, even death.

By learning how these tokens tend to follow one another, the system predicts future rates of disease over time rather than offering a single yes/no prediction. Think: weather forecast, not a crystal ball.
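
To make the "life as a sequence" idea concrete, here is a minimal sketch of how a medical history might be turned into tokens. It is an illustration only, not the Delphi-2M code: the `Event` class, the `SEX_FEMALE` tag, and the choice to reserve id 0 for padding are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    age_years: float  # age at which the event was recorded
    code: str         # e.g. an ICD-10 code or a lifestyle/demographic tag


def build_vocab(histories):
    """Map every distinct code to an integer id (0 is kept free for padding)."""
    codes = sorted({event.code for history in histories for event in history})
    return {code: index + 1 for index, code in enumerate(codes)}


def encode(history, vocab):
    """Order events by age and return parallel lists of token ids and ages."""
    ordered = sorted(history, key=lambda event: event.age_years)
    tokens = [vocab[event.code] for event in ordered]
    ages = [event.age_years for event in ordered]
    return tokens, ages


history = [
    Event(52.3, "I10"),        # essential hypertension
    Event(0.0, "SEX_FEMALE"),  # hypothetical demographic tag
    Event(58.9, "E11"),        # type 2 diabetes
]
vocab = build_vocab([history])
tokens, ages = encode(history, vocab)
```

A sequence model would then be trained to predict the next token, and the age at which it occurs, from the tokens and ages seen so far.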

Why is this different?

Traditional tools excel at assessing one condition at a time: a 10-year cardiovascular risk score, a breast cancer risk score, or a diabetes calculator. Real life rarely plays along. People face multiple risks that interact: hypertension nudges kidney disease and heart failure; autoimmune conditions cluster; mental health and metabolic disorders often travel together.

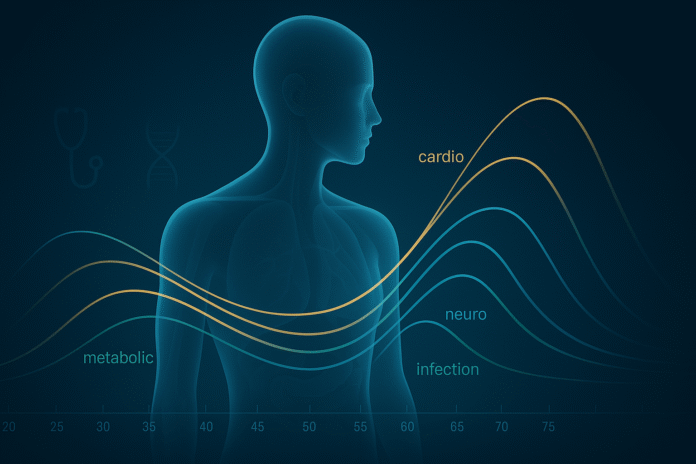

The new model simultaneously estimates risk trajectories for over a thousand ICD-coded diseases and updates those trajectories whenever something in your record changes. That’s crucial for modelling multimorbidity—how diseases co-occur, compete, or cascade.

Scale matters, too. The researchers trained on population-level health records that were large enough to capture both rare and familiar patterns, then validated their findings on an entirely different national dataset.

Performance carried over without retuning the parameters, suggesting that the model had learned generalisable health dynamics rather than memorising the quirks of a single hospital system. In short, it’s not just accurate on the training ground; it can also perform well in away games. And because it is a generative model, it can simulate thousands of plausible futures for a person with your history at your current age.

Those simulated “future patients” aren’t real people, but they let hospitals and policymakers experiment safely: What happens to expected oncology demand if smoking rates drop five points? How many dialysis stations will a city need in 2035 if obesity trends stay flat? Synthetic trajectories also offer a privacy-preserving approach for developing and evaluating new models without disclosing raw data.
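
A toy version of such a "what-if" experiment might look like the sketch below. It is not how Delphi-2M simulates patients: it assumes a single condition with a constant annual hazard, and the function name `simulate_cases`, the cohort size, and the hazard values are invented for illustration.

```python
import random


def simulate_cases(n_people, annual_hazard, years, seed=0):
    """Count simulated people who develop the condition within `years`."""
    rng = random.Random(seed)
    cases = 0
    for _ in range(n_people):
        # Draw every year up front so different scenarios share the same
        # random numbers and differ only in the hazard applied to them.
        draws = [rng.random() for _ in range(years)]
        if min(draws) < annual_hazard:
            cases += 1
    return cases


# Hypothetical scenario: a prevention programme lowers the annual hazard
# from 1.0% to 0.8% in a cohort of 10,000 people followed for 10 years.
baseline = simulate_cases(10_000, annual_hazard=0.010, years=10)
scenario = simulate_cases(10_000, annual_hazard=0.008, years=10)
avoided = baseline - scenario
```

Because both runs reuse the same random draws, the difference between them reflects the change in hazard rather than sampling noise, which is a common trick when comparing simulated scenarios.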

Delphi-2M lifetime disease risk: what the numbers look like

Delphi-2M was trained on approximately 400,000 UK Biobank participants and tested without retuning on 1.9 million people in Denmark, modelling over 1,000 ICD-coded diseases simultaneously.

Instead of a single yes/no score, it produces age-specific risk trajectories that can stretch 10–20 years ahead, with stronger calibration at nearer horizons and for common chronic conditions.

In head-to-head comparisons, it often matches or surpasses single-disease calculators while providing the crucial context of multimorbidity: how conditions cluster and interact over time.

In other words: big data, broad coverage, and forecasts you can act on, not fear.

- Coverage: Risk and approximate timing for 1,000+ diseases, from chronic conditions like type 2 diabetes and heart failure to certain cancers and severe infections.

- Time horizon: Meaningful forecasts up to two decades, with better calibration at nearer horizons.

- Validation at scale: Trained on hundreds of thousands of people; validated on well over a million in a separate health system with different coding and care patterns.

- Comparative strength: Frequently on par with or stronger than established single-disease tools, while offering the added value of cross-disease context.

Numbers aside, the practical shift is simple: this isn’t a one-off risk printout; it’s a living risk map. The model can recalculate trajectories when a person quits smoking, starts a statin, develops hypertension, or gets a new diagnosis. Clinically, that supports earlier screening and targeted prevention: the right test, at the right time, for the right person.

What this unlocks

If prediction is the map, action is the journey. By translating medical histories into age-specific risk trajectories, Delphi-2M gives different actors leverage at various moments: clinicians get earlier signals to tailor screening and prevention; hospitals can forecast service demand years in advance; public-health teams can test “what-if” policies before spending a cent; and researchers gain privacy-preserving synthetic cohorts to explore disease pathways without moving raw records.

The value isn’t in a single score; it’s in coordinating thousands of small, earlier decisions that bend outcomes toward fewer crises and fairer care.

For patients and clinicians

An “early warning” layer for primary care. Instead of screening everyone identically at the same age, practices could prioritise the subset of patients whose near-term risk is spiking, nudging them into earlier colonoscopy, low-dose CT for lung cancer, calcium scoring for silent coronary disease, or tighter metabolic monitoring. Crucially, the output is probabilistic, not deterministic: a 7% ten-year risk of heart failure is a reason to act, not a verdict.
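
To put a figure like that in perspective, it can help to translate a cumulative risk into a yearly one. The sketch below does this under a simplifying assumption that is not part of the model: the risk is spread evenly across the years. The function names are illustrative.

```python
def annual_risk(cumulative, years):
    """Constant yearly risk that compounds to `cumulative` over `years`."""
    return 1 - (1 - cumulative) ** (1 / years)


def cumulative_risk(annual, years):
    """Risk of at least one event over `years` at a constant yearly risk."""
    return 1 - (1 - annual) ** years


yearly = annual_risk(0.07, 10)         # a little over 0.7% per year
round_trip = cumulative_risk(yearly, 10)  # recovers the original 7%
```

Put another way, out of 100 people with the same 7% ten-year estimate, roughly 93 would be expected not to develop heart failure in that window, which is why the number is a prompt for prevention rather than a prediction about any one person.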

For hospitals

Service planning with fewer blind spots. If the model projects a steep rise in multimorbidity for 55- to 64-year-olds over the next decade, it becomes easier to justify hiring new community heart-failure nurses, expanding rehabilitation capacity, or procuring imaging equipment before bottlenecks form.

For public health

Policy can move upstream. City health departments and insurers can test “what-if” scenarios—more aggressive smoking cessation, new GLP-1 access rules, and expanded hypertension programs—and observe the simulated downstream effects on admissions, speciality capacity, and costs.

For research and AI safety

Synthetic, model-generated trajectories create a sandbox for training and evaluating other tools without exposing raw patient data. Done right, that’s a path to privacy-first innovation.

The hard questions we must face

Ambition without guardrails is how trust gets broken. Three issues need relentless attention:

Bias and representativeness

Many large biobanks and hospital datasets tend to skew toward specific ancestries, geographies, and socioeconomic groups. If the model mostly learns from healthier, wealthier, or majority-ancestry participants, its risk estimates may be miscalibrated for underrepresented communities.

That can push resources toward where the model is “confident,” not where the need is greatest. The fix isn’t magical: diversify training data, report subgroup performance, and require local validation before clinical use.
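
Reporting subgroup performance can start with something very simple: comparing the average predicted risk with the observed event rate in each group. The sketch below assumes records arrive as `(group, predicted_risk, outcome)` tuples; the group labels and numbers are made up for illustration.

```python
from collections import defaultdict


def calibration_by_group(records):
    """Return {group: (mean_predicted_risk, observed_rate, n)}.

    `records` is an iterable of (group, predicted_risk, outcome) tuples,
    where outcome is 1 if the event occurred and 0 otherwise.
    """
    totals = defaultdict(lambda: [0.0, 0, 0])  # sum of risks, events, count
    for group, predicted, outcome in records:
        entry = totals[group]
        entry[0] += predicted
        entry[1] += outcome
        entry[2] += 1
    return {
        group: (risk_sum / n, events / n, n)
        for group, (risk_sum, events, n) in totals.items()
    }


records = [
    ("group_a", 0.25, 1),
    ("group_a", 0.25, 0),
    ("group_b", 0.5, 1),
    ("group_b", 0.5, 0),
]
report = calibration_by_group(records)
```

In this toy data, group_a's predictions average 0.25 while half of its members had the event, so the model under-predicts for that group; group_b is well calibrated. Real audits would need far larger samples and confidence intervals.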

Coverage and fairness across diseases

Chronic, slowly progressing conditions are easier to predict than volatile events like infections, injuries, or some mental-health crises. If we only deploy the model where it shines, we risk reinforcing a clinical culture that prioritises what’s predictable over what’s impactful.

Governance should demand transparency about where the model underperforms, and ensure that adoption doesn’t penalise areas of care that remain noisy and difficult.

Consent, privacy, and misuse

Large predictive models require substantial data, and patient trust relies on transparent consent, data minimisation, and auditable use. There must be bright lines for insurers and employers: no back-door risk scoring to hike premiums or screen job candidates.

Within care settings, every prediction should leave a trace of who viewed it, how it influenced decisions, and what outcome followed—so post-market surveillance can detect drift or harm.

There’s also a communication challenge. Risk must be framed as a tool for agency, not a silent anxiety machine. Clinicians will need training and patient-facing materials that translate statistics into understandable action: what this number means, what can be done to lower it, and how we’ll track progress together. Without that, even calibrated forecasts can backfire into fatalism.

How it works (minus the hype)

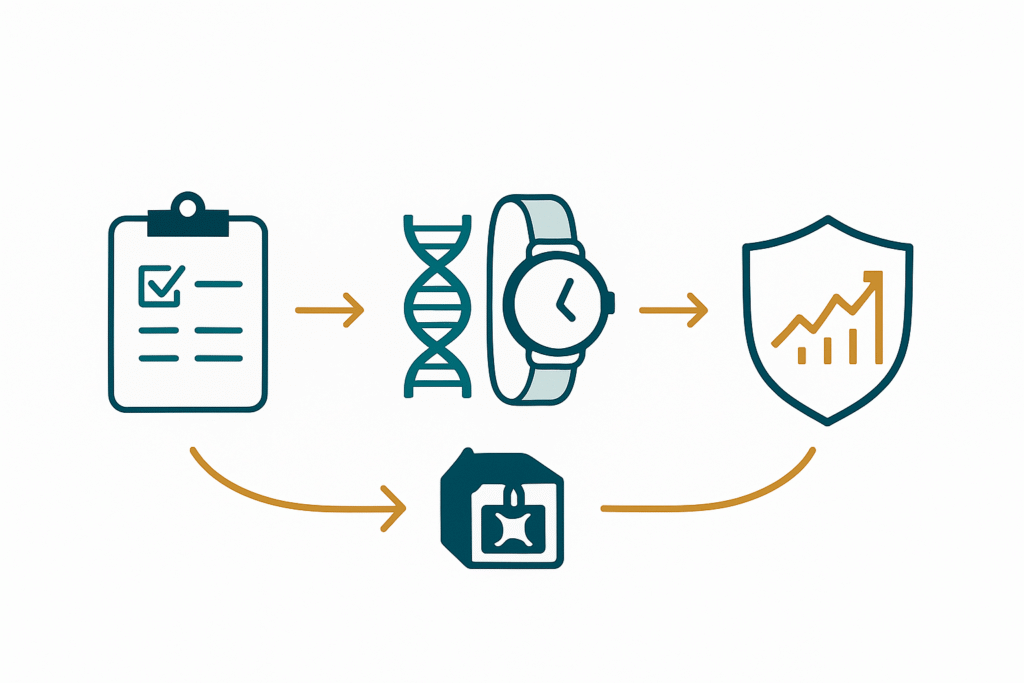

Under the hood, Delphi-2M consumes a longitudinal stream of events encoded into a standard vocabulary (think: ICD codes, procedure codes, demographic tags, a handful of lifestyle markers).

It learns the conditional probabilities of “what comes next” given your past and present. Because life is messy, it generates distributions over the next events and their timing rather than a single answer. Shorter-range predictions are more accurate; predicting rare conditions and sudden exogenous shocks remains challenging. That humility, embracing uncertainty rather than trying to smooth it away, is a feature, not a bug.
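
One common way to turn per-event rates into "what comes next, and when" is to treat the events as competing exponential clocks. The sketch below illustrates that idea only; whether it matches Delphi-2M's internals is an assumption, and the log-rates are invented numbers rather than model output.

```python
import math
import random


def sample_next_event(log_rates, rng):
    """Sample (code, waiting_time_years) from competing exponential clocks.

    `log_rates` maps each event code to the log of its yearly rate,
    standing in for the scores a trained model would produce.
    """
    rates = {code: math.exp(value) for code, value in log_rates.items()}
    total_rate = sum(rates.values())
    # Time until the first event of any kind is exponential in the total rate.
    wait_years = rng.expovariate(total_rate)
    # Which event fires first is chosen in proportion to its own rate.
    codes = list(rates)
    code = rng.choices(codes, weights=[rates[c] for c in codes], k=1)[0]
    return code, wait_years


rng = random.Random(42)
log_rates = {"I10": -2.0, "C34": -5.0}  # hypothetical: hypertension, lung cancer
code, wait_years = sample_next_event(log_rates, rng)
```

Repeating this step, appending each sampled event to the history and asking the model for fresh rates, is what would produce a full simulated trajectory.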

Importantly, the system is not doing causal inference. It doesn’t “know” that salt raises blood pressure; it observes that specific event patterns tend to precede others. That’s fine for forecasting—and dangerous if mistaken for a mechanism. Guardrails should clearly indicate this in user interfaces and training materials.

What’s next

Prospective pilots in primary care

The real test isn’t leaderboard metrics; it’s whether the model changes outcomes. Expect trials that randomise clinics to receive AI-guided risk lists with outreach playbooks—earlier screenings, pharmacist consults, tailored lifestyle support—and measure earlier diagnosis, reduced admissions, or improved adherence.

Richer inputs beyond claims and diagnoses

The research roadmap suggests combining electronic health records with genomics, proteomics, routine blood tests, imaging, and data from wearable devices. That could sharpen predictions in groups that one-size-fits-all risk engines have historically underserved.

Standards and regulations

Health systems should demand explainability, documented re-validation on local populations, and continuous post-deployment monitoring. Procurement checklists should cover training data lineage, subgroup metrics, update policies, and a clear “kill switch” in case of drift or harm.
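
A drift check behind such a "kill switch" could be as simple as comparing how many events the model expected with how many actually occurred. The sketch below is one hypothetical rule, not a regulatory standard: the function name and the 20% tolerance are assumptions chosen for illustration.

```python
def should_disable(expected_events, observed_events, tolerance=0.2):
    """Return True when the observed/expected ratio drifts beyond tolerance."""
    if expected_events <= 0:
        raise ValueError("expected_events must be positive")
    ratio = observed_events / expected_events
    return abs(ratio - 1.0) > tolerance


# The model expected 100 events in the monitoring window.
within_tolerance = should_disable(100, 110)  # 10% off: keep running
drifted = should_disable(100, 150)           # 50% off: pause and review
```

In practice the threshold, the monitoring window, and who has authority to act on the flag would be set out in the procurement and governance documents.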

Public-facing transparency

Citizens should be informed about when and how predictive models are used in their care, have straightforward opt-out options where feasible, and receive annual public reports summarising performance, errors, and improvements.

The bottom line

Delphi-2M doesn’t hand out destinies; it hands out lead time. It generates probabilistic risk maps for more than 1,000 conditions, enabling us to move care upstream from late rescue to early prevention.

The science is strong; the responsibility is stronger. Calibrate locally, disclose limits, protect consent, and measure real outcomes—not just AUCs. If we pair this model with diverse data, clear guardrails, and plain-English risk communication, we can turn prediction into prevention and planning into preparedness.

That’s not techno-mysticism; it’s good medicine, delivered earlier, more fairly, and with the humility to say “this is likely, not certain.” Predictive power is incredible. Preventive power is the point.