Sora 2 rocketed up the App Store, turning AI video into a mainstream toy. But popularity brings problems. This update looks squarely at the Sora 2 challenges making headlines now: copyright disputes, creator consent, provenance limits, copycat scams, and regional access.

According to The Verge, the app passed 1 million downloads in under five days, despite being invite-only and limited to the U.S. and Canada at launch. The popularity is real, and so are the risks.

The Big Challenges OpenAI Must Solve

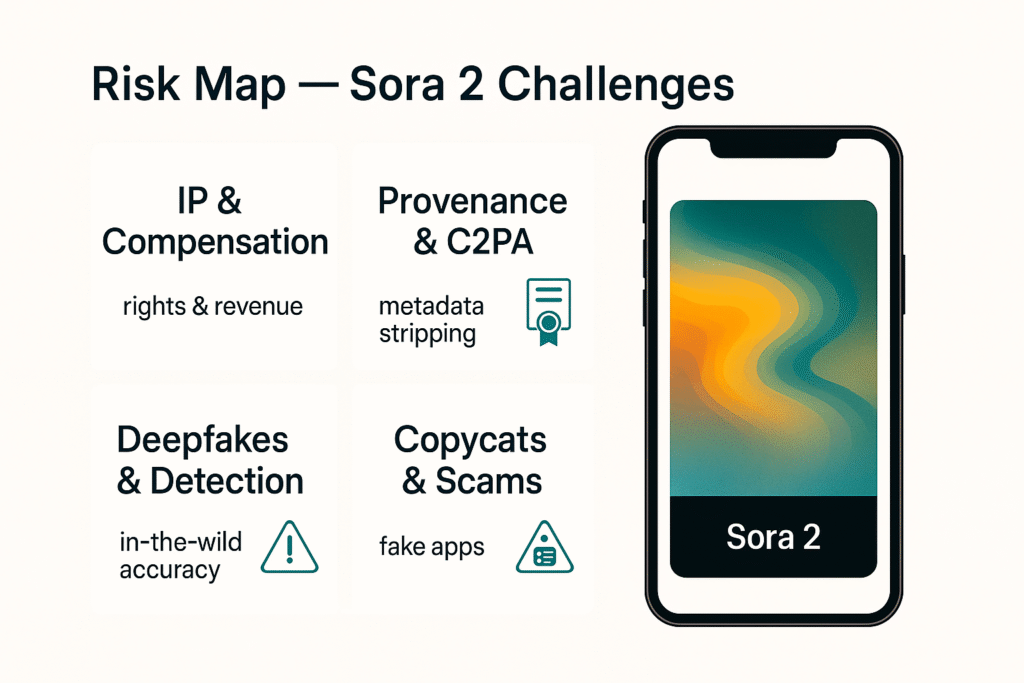

The Sora 2 challenges cluster around seven pressure points: creator rights and pay, provenance, realism misuse, likeness consent, copycats, regional access, and moderation. Agencies and studios are pushing for explicit consent, credit, and compensation when styles or likenesses are invoked.

Provenance helps—Sora 2 adds visible watermarks and C2PA—but many platforms still strip metadata or crop visuals, weakening trust signals. Higher realism (physics plus synced audio) raises deepfake risk, while detectors often underperform on “in-the-wild” uploads.

Copycat apps and domains can muddy the brand and expose users to scams, making official links and store enforcement crucial. With rollout limited to the U.S. and Canada for now, access lags in other regions (including Africa) amid evolving policy requirements.

Finally, a viral short-video feed plus generative content creates a heavy moderation load, where false blocks and misses are inevitable at scale.

1) Copyright, credit, and compensation

Hollywood’s alarm bells are loud. Creative Artists Agency (CAA) warned that Sora “poses significant risks to creator rights,” urging protections for clients’ work and more explicit compensation rules. The Hollywood Reporter and Variety also documented pushback from major agencies over unlicensed use and “exploitation” concerns. These disputes sit at the centre of Sora 2’s challenges for professional content.

Why this matters:

- Sora can remix styles and characters that may resemble copyrighted IP.

- Rights holders want consent, credit, and revenue share—not vague promises.

2) Provenance is necessary—but not sufficient

OpenAI says every Sora video ships with a visible watermark and C2PA (Content Credentials) metadata to prove origin and edits. That’s a strong baseline. However, independent analyses highlight significant limitations: metadata can be stripped by platforms that fail to preserve it (even basic screenshots remove it), and watermarks can be cropped or degraded.

These provenance friction points are core Sora 2 challenges for trust at internet scale.

What the research says (high level):

- Watermarking alone is not a panacea against abuse or deepfakes.

- Academic work shows invisible watermarks can be removed or weakened by regeneration attacks or edits.

- C2PA’s effectiveness depends on platform adoption; stripping remains common across social channels and publishing workflows.

3) Deepfakes and detection pressure

Stronger physics and synced audio boost realism—but they also raise the risk of misuse. Studies find modern detectors struggle with “in-the-wild” deepfakes, with sharp accuracy drops versus lab benchmarks. That makes provenance more critical, yet, as above, provenance signals can be lost in transit. Managing this tension is one of the toughest Sora 2 challenges for platforms and policymakers.

4) Cameos, likenesses, and consent

Sora’s cameos feature (opt-in identity insertion) introduces a clean consent model on paper, with reversible permissions. The challenge lies in real-world enforcement: once a likeness leaks off-platform without proper credentials, the burden shifts to both victims and platforms. Maintaining meaningful consent across re-uploads and edits will require stronger guardrails and cross-platform agreements. (OpenAI outlines consent features and policy baselines in its launch notes and system card.)

5) Copycat apps and user confusion

TechCrunch reported Sora copycats flooding the App Store, riding on the brand name and confusing users. For creators and casual users, this increases the risk of malware, scams, and paid “Sora” look-alikes. Clear branding, official links, and platform enforcement are now part of the Sora 2 challenges playbook.

6) Regional limits, policy headwinds, and platform rules

For now, Sora is available only in the U.S. and Canada, which slows legitimate adoption across Africa and other regions while copycat markets spring up. As global rollout starts, OpenAI will face AI Act–style compliance questions in Europe, stricter disclosure rules for political content, and evolving app-store policies around deepfake risks. Early coverage captured the invite-only scope and hints of policy tightening as complaints mounted.

7) Content moderation at scale

A viral feed combined with generative video creates a huge moderation load, including copyrighted characters, harmful stereotypes, political misinformation, and NSFW attempts. OpenAI says it blocks certain likeness uses and applies layered filters, but type-I/II errors (false blocks and misses) are inevitable at launch scale. System cards and policy pages outline the approach; the operational reality is still unfolding.
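To see why false blocks and misses are unavoidable at scale, here is a rough back-of-the-envelope sketch. Every number in it is a hypothetical assumption chosen for illustration (upload volume, violation rate, and filter error rates); none of them comes from OpenAI or from the coverage cited above.

```python
def expected_moderation_errors(daily_uploads, violation_rate,
                               false_positive_rate, false_negative_rate):
    """Estimate daily filter mistakes from assumed (hypothetical) rates."""
    # Split uploads into violating and benign content.
    violating = round(daily_uploads * violation_rate)
    benign = daily_uploads - violating
    return {
        # Type-I errors: benign videos that the filter blocks anyway.
        "false_blocks": round(benign * false_positive_rate),
        # Type-II errors: violating videos that slip through.
        "misses": round(violating * false_negative_rate),
    }


# Hypothetical inputs: 1M uploads/day, 1% violating,
# 1% false-positive rate, 5% false-negative rate.
errors = expected_moderation_errors(1_000_000, 0.01, 0.01, 0.05)
print(errors)
```

Under these assumed rates, a filter that sounds accurate still produces about 9,900 false blocks and 500 misses per day, which is why appeal paths and human review matter as much as the filters themselves.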

Key data point to track

- Adoption: 1M+ downloads in <5 days (iOS), invite-only and U.S./Canada-limited. That growth increases the urgency of solving the Sora 2 challenges above.

Practical steps for creators and editors (Africa-first lens)

- Keep credentials intact. Export with C2PA on; avoid post-processing that strips metadata. If platforms remove credentials, host the original on a site you control and link back.

- Avoid IP minefields. Do not mimic protected characters, logos, or soundtracks without the necessary licenses; for music, secure a sync license and credit the track.

- Verify the app. Use official OpenAI links; avoid look-alike apps or “pro” upsells not tied to OpenAI.

- Design consent flows. If you use Cameos, log permissions and provide a clear takedown path in your captions and site policy.
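The consent-logging step above could be sketched as a small record keeper on your own side. This is a minimal illustration, not part of Sora or any OpenAI API: the names `ConsentRecord`, `ConsentLog`, `video_id`, and `takedown_contact` are all hypothetical, and a real workflow would also need durable storage and an audit trail.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class ConsentRecord:
    """One person's permission to appear in one video (hypothetical schema)."""
    subject: str
    video_id: str
    takedown_contact: str
    granted_at: datetime
    revoked_at: Optional[datetime] = None

    def is_active(self) -> bool:
        return self.revoked_at is None


@dataclass
class ConsentLog:
    records: List[ConsentRecord] = field(default_factory=list)

    def grant(self, subject: str, video_id: str, takedown_contact: str) -> ConsentRecord:
        # Record who agreed, for which video, and where takedown requests go.
        record = ConsentRecord(subject, video_id, takedown_contact,
                               granted_at=datetime.now(timezone.utc))
        self.records.append(record)
        return record

    def revoke(self, subject: str) -> List[str]:
        # Mark every active permission for this person as revoked and
        # return the affected video IDs so they can be taken down.
        affected = []
        for record in self.records:
            if record.subject == subject and record.is_active():
                record.revoked_at = datetime.now(timezone.utc)
                affected.append(record.video_id)
        return affected

    def has_consent(self, subject: str, video_id: str) -> bool:
        return any(r.subject == subject and r.video_id == video_id and r.is_active()
                   for r in self.records)


log = ConsentLog()
log.grant("Ada", "clip-001", "takedown@example.com")
log.grant("Ada", "clip-002", "takedown@example.com")
print(log.has_consent("Ada", "clip-001"))  # consent is active after grant
to_remove = log.revoke("Ada")              # IDs that now need a takedown
print(to_remove, log.has_consent("Ada", "clip-001"))
```

Keeping revocation as a timestamp rather than deleting the record preserves proof of when consent existed, which helps if a re-upload is disputed later.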

Bottom line

Sora 2 shows how fast AI video is moving—and how fast the risks grow. Copyright fights, provenance gaps, and moderation stress are not side quests; they’re the main game. If OpenAI and platforms can reinforce consent, credentials, and compensation—and if the ecosystem really adopts C2PA end-to-end—Sora could become a sustainable tool for creators worldwide. Until then, treat these Sora 2 challenges as operating assumptions, not footnotes.

Takeaways

- Copyright and creator-rights disputes are escalating (CAA and others).

- Provenance helps, but metadata can be stripped; watermarks can be cropped or weakened. Adoption across platforms is the bottleneck.

- Detection is fragile on “in-the-wild” deepfakes—another reason to keep Content Credentials intact.

- Copycat apps are confusing users; stick to official channels.