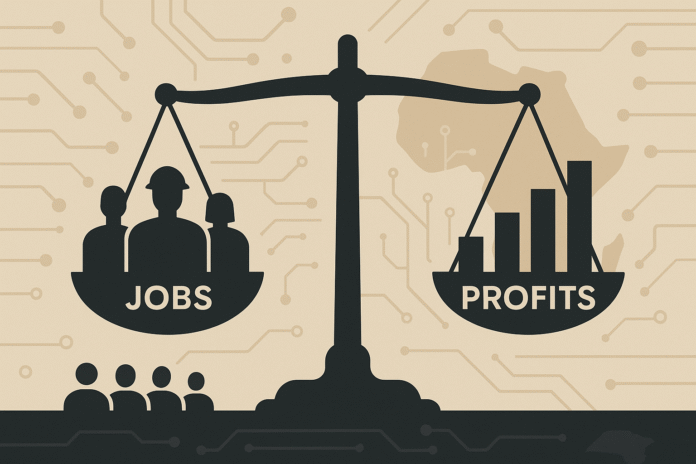

Geoffrey Hinton—the deep-learning pioneer and 2024 Nobel Prize laureate in physics—has issued another alarm: AI will create “massive unemployment” while driving a “huge rise in profits,” worsening inequality unless we change the rules of the game.

He suggests the main impact will fall on routine, task-heavy jobs; productivity gains in areas like healthcare could be substantial. Financial Times

The Gist

- Who said what: In a new Financial Times interview, Hinton argues the richest actors will use AI to replace workers, accelerating profits at the top. Financial Times

- Who’s most exposed: Roles built on repeatable processes (clerical ops, back-office support, basic analysis, standard reporting) face the sharpest automation pressure, while expert fields (notably healthcare) may see productivity windfalls. The Times of India

- Disagreement on safety nets: He pushes back on universal basic income (UBI) as a complete solution, challenging the Sam Altman-style “cash floor” narrative. (Altman himself has lately shifted toward “universal basic wealth.”) The Times of India, Yahoo Finance

What Hinton actually said

Hinton’s line is blunt: AI will “make a few people much richer and most people poorer,” with the mechanism being the replacement of routine labour at scale. He frames the dynamic as downstream of capitalism’s incentives, not a moral failing of the tech itself. Financial Times

He’s also still on the record estimating a 10–20% risk that advanced AI goes catastrophically wrong, an existential tail risk he’s repeated in recent months. That’s not a prediction; it’s a probability big enough that serious safety work is non-optional. CBS News, The Guardian

Context check: Hinton is not a fringe voice. He shared the 2024 Nobel Prize in Physics for foundational work that enabled modern neural networks. When he talks about where those networks are headed, we should treat it as expert testimony. NobelPrize.org

Does the data show mass layoffs yet?

Not in the aggregate—so far. New surveys from the New York Fed indicate that few direct AI-driven layoffs are occurring today; instead, many firms are retraining their staff. But employers do expect more restructuring as adoption deepens.

Takeaway: short-run impact is modest; medium-run pressure is coming. Reuters, Barron’s, Liberty Street Economics

FanalMag take: Inequality is a policy choice, not a law of physics

Hinton’s forecast is a trajectory, not fate. If we keep current incentives, AI’s gains pool at the top. Change the incentives, and the curve bends.

What to change (pragmatic levers leaders can pull now):

- Wage insurance + transition accounts: Short-term income top-ups for displaced workers paired with funded re-skilling paths (not just “courses,” but paid time + job guarantees).

- An “AI dividend” on windfall profits to finance training, local innovation grants, and public digital infrastructure (compute, connectivity, data tooling).

- Data trusts & worker models: Treat workplace data as a shared asset; share model-driven gains with the people whose workflows trained them.

- Anti-monopoly guardrails: Cap vertical lock-in (models, cloud, and apps) that traps value on a few platforms.

None of this is science fiction. It’s just industrial policy with a 2025 brain.

Who’s most exposed vs. most complemented

- High exposure (near-term): Contact centres; routine back-office ops; claims processing; basic bookkeeping/reporting; entry-level programming that’s mostly glue code; content farms.

- Medium exposure: Mid-level analyst work where judgment is learnable and audits are possible.

- Complemented (if we’re smart): Clinicians, educators, field technicians—any role where AI augments a scarce expert while humans retain responsibility. Hinton specifically notes the potential efficiency gains in healthcare. The Times of India

The Africa angle (our house view)

Africa can avoid the worst version of Hinton’s future by designing for complementarity from the outset.

- Local-language AI as an employment multiplier: Fund open small/medium models that understand Hausa, Yoruba, Igbo, Amharic, Swahili, etc., so teachers, nurses, and civil servants get superpowers in their own languages—not generic bots that centralise value offshore.

- Compute & connectivity as public goods: Solar-backed micro-data centres + broadband as infrastructure, so startups can fine-tune locally rather than rent all brains from abroad.

- Skills pipelines: National “AI Technician” certificates for operations, prompt/safety engineering, evaluation, and applied data stewardship—fast to earn, tied to real jobs.

- Procurement with teeth: Government and telco/fintech procurement that requires vendors to: (a) publish job-transition plans, (b) fund re-skilling, and (c) pay a local AI-dividend.

If you run a company, consider doing the following within the next 90 days:

- Task-level inventory: Map your workflows to tasks, not jobs; tag tasks by automate/assist/retain.

- Put human accountability in writing: Draft a policy on accountability, escalation, and human override for AI-mediated decisions.

- Retraining Pledge: Commit to re-skilling every impacted employee at company expense and publish the numbers.

- Profit-sharing mechanism: Route a fixed % of AI-efficiency gains into worker bonuses or equity.

- Model governance board: Security, legal, ops, and worker reps reviewing deployments monthly.

- Vendor clauses: Require evals, bias reporting, and transition funding in every AI software contract.
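
For teams that want a starting point, the task-level inventory and the profit-sharing item above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not a prescribed method: the `Task` record, the `hours_by_tag` and `worker_share` helpers, and the hours-per-week field are all hypothetical names introduced here, and the example rows are made up.

```python
from dataclasses import dataclass

# The three tags suggested in the checklist above.
TAGS = ("automate", "assist", "retain")


@dataclass(frozen=True)
class Task:
    """One task inside a workflow (hypothetical record layout)."""
    workflow: str
    name: str
    tag: str               # one of TAGS
    hours_per_week: float  # assumed effort measure, not from the article

    def __post_init__(self):
        # Reject tags outside the automate/assist/retain scheme.
        if self.tag not in TAGS:
            raise ValueError(f"unknown tag: {self.tag!r}")


def hours_by_tag(tasks):
    """Sum weekly hours per tag so exposure is visible at task level."""
    totals = {tag: 0.0 for tag in TAGS}
    for task in tasks:
        totals[task.tag] += task.hours_per_week
    return totals


def worker_share(efficiency_gain, share_pct):
    """Amount routed to workers given a fixed percentage of AI-driven gains."""
    if not 0 <= share_pct <= 100:
        raise ValueError("share_pct must be between 0 and 100")
    return efficiency_gain * share_pct / 100


if __name__ == "__main__":
    # Illustrative rows only; real inventories come from workflow mapping.
    inventory = [
        Task("claims", "data entry", "automate", 10.0),
        Task("claims", "exception review", "assist", 5.0),
        Task("claims", "customer appeal call", "retain", 2.5),
    ]
    print(hours_by_tag(inventory))
    print(worker_share(efficiency_gain=1000.0, share_pct=10))
```

Tagging tasks rather than jobs keeps the conversation on which parts of a role change, and the per-tag totals give the governance board and the retraining pledge a shared number to work from.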

Bottom line

Hinton’s warning isn’t doom; it’s a fork in the road. Left alone, AI’s default setting is profit concentration and erosion of routine jobs. With deliberate rules, we can foster broad-based productivity and deliver better services without overburdening people. The technology is neutral. The outcomes are not. Financial Times

Sources & further reading

- Hinton’s latest remarks and framing in the Financial Times, as well as round-ups across Indian outlets. Financial Times, The Times of India, The Indian Express

- Labour-market data on AI adoption/layoffs from the New York Fed (summaries via Reuters and Barron’s). Liberty Street Economics, Reuters, Barron’s

- Hinton’s prior risk estimates and context. CBS News, The Guardian

- Nobel background. NobelPrize.org