Large Language Models (LLMs) like GPT-4 and Claude 3 have captured headlines for their intelligence and scale.

However, a quiet revolution is underway in AI — one that’s smaller, faster, and potentially more impactful, especially in resource-constrained environments like Africa.

Small Language Models (SLMs) are emerging as the new frontier in AI — lightweight, efficient, and powerful enough to run on laptops, phones, or even Raspberry Pi devices.

SLMs are transforming the AI landscape by introducing powerful models to low-resource environments, particularly in emerging markets.

What Are Language Models?

Before diving into SLMs and LLMs, let’s clarify what a language model is.

A language model is like a super-smart librarian who can predict the next word in a sentence, generate text, or answer questions based on patterns learned from vast training data.

These models are trained on text datasets, enabling them to comprehend and generate human-like language.

For example, when you ask a chatbot, “What’s the weather like?” it uses a language model to interpret your question and generate a response.
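To make "predicting the next word" concrete, here is a minimal sketch of the idea using simple word-pair counts. The tiny corpus and the `predict_next` helper are made up for illustration; real language models use neural networks trained on vastly more text, but the goal of guessing what comes next is the same.

```python
from collections import Counter, defaultdict

# Toy corpus (made up); a real model learns from billions of words.
corpus = (
    "the weather is sunny today . "
    "the weather is rainy today . "
    "the weather is sunny again ."
).split()

# Count how often each word follows each other word (a "bigram" table).
following = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    following[current_word][next_word] += 1

def predict_next(word):
    """Return the word most often seen after `word`, or None if unseen."""
    candidates = following.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

print(predict_next("weather"))  # is
print(predict_next("is"))       # sunny
```

In this corpus "sunny" follows "is" twice and "rainy" only once, so the model picks "sunny". Scaling that pattern-counting idea up is what gives real models their fluency.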

Language models vary in size, complexity, and purpose. The two main categories are:

- Large Language Models (LLMs): Massive models with billions or trillions of parameters (think of parameters as the “knowledge knobs” that determine how the model processes data). Examples include OpenAI’s GPT-4 and Google’s Gemini.

- Small Language Models (SLMs): Compact models with millions to a few billion parameters designed for efficiency and specific tasks. Examples include Microsoft’s Phi-3 and Google’s Gemma.

Large Language Models (LLMs): The Heavyweights of AI

LLMs are the giants of the AI world. They’re trained on enormous datasets, sometimes petabytes of text, and have hundreds of billions of parameters.

For instance, GPT-4 is rumoured to have 1.8 trillion parameters, which would require about 7.2 terabytes of storage for its values alone at 32-bit precision (4 bytes per parameter)!

LLMs are powerful and capable of handling complex tasks such as:

- Generating human-like text for articles or stories.

- Translating languages with high accuracy.

- Summarising long documents or answering broad questions.

Strengths of LLMs

Large Language Models (LLMs) like GPT-4 and Gemini have become the backbone of advanced AI applications.

Their massive scale allows them to handle a wide range of tasks—from writing and translation to coding and research—with impressive fluency and depth.

These models are built to be generalists, capable of understanding and generating human-like responses across countless domains.

- Versatility: LLMs excel at general-purpose tasks. They can handle everything from writing code to creating poetry, making them ideal for applications like ChatGPT or advanced research tools.

- Deep Understanding: With their massive parameter count, LLMs capture intricate language patterns and long-range dependencies, leading to nuanced and contextually rich responses.

- Transfer Learning: LLMs can adapt to new tasks with minimal additional training, thanks to their broad knowledge base.

Challenges of LLMs

But with great power comes great complexity. The same scale that gives LLMs their intelligence also makes them costly, energy-intensive, and difficult to deploy on everyday devices.

Their dependence on cloud infrastructure raises concerns about latency, privacy, and accessibility, especially in regions with limited internet connectivity or computational resources.

Resource Intensive

Training and running large language models (LLMs) require enormous computational power, often costing millions of dollars. For example, training Google’s Gemini 1.0 Ultra reportedly cost $191 million.

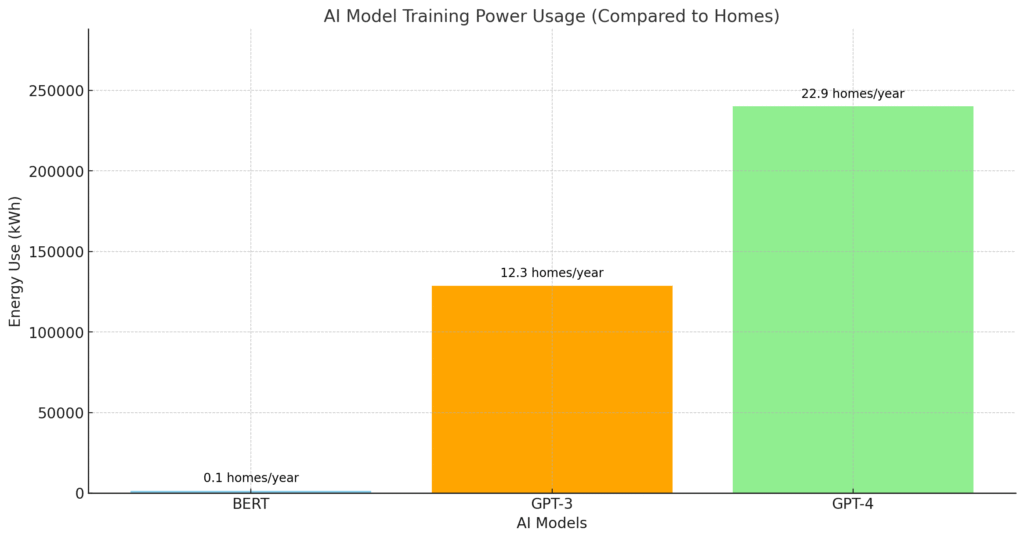

Energy Hogs

Large Language Models (LLMs) consume a significant amount of energy, raising environmental concerns. Their complex architecture demands powerful GPU clusters, making them impractical for smaller organisations.

LLMs don’t just cost money — they also cost power.

The energy required to train a high-end LLM can consume as much electricity as a small town over several days.

For example, training even a relatively small model like BERT on a GPU cluster might use around 1,500 kWh, roughly what an average U.S. household uses in just under two months.

Not Edge-Friendly

Due to their size, LLMs typically run on cloud servers rather than on devices like smartphones or IoT sensors, which leads to latency (delays) and privacy concerns when data is sent to the cloud.

What Are Small Language Models?

SLMs are the compact counterparts of LLMs. Instead of hundreds of billions of parameters, SLMs typically operate with roughly 1B to 7B parameters, making them dramatically lighter in both compute and memory usage.

For example, Microsoft’s Phi-3-mini has 3.8 billion parameters and can run on a smartphone, occupying just 1.8GB of memory when quantised to 4 bits.
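The storage figures quoted in this article follow from simple arithmetic: number of parameters times bits per parameter. The sketch below assumes we count only the weights (no activations or runtime overhead) and uses decimal units. The helper name `weights_size_bytes` is ours, not part of any library.

```python
def weights_size_bytes(num_parameters, bits_per_parameter):
    """Storage needed for the weights alone (no activations, no overhead)."""
    return num_parameters * bits_per_parameter / 8

GB = 10**9   # decimal gigabyte
TB = 10**12  # decimal terabyte

# Rumoured GPT-4 size quoted earlier, stored as 32-bit floats.
gpt4_bytes = weights_size_bytes(1.8e12, 32)
print(f"GPT-4 (rumoured) at 32-bit: {gpt4_bytes / TB:.1f} TB")  # 7.2 TB

# Phi-3-mini at three common precisions.
for bits in (32, 16, 4):
    size = weights_size_bytes(3.8e9, bits)
    print(f"Phi-3-mini at {bits}-bit: {size / GB:.1f} GB")
# 32-bit: 15.2 GB, 16-bit: 7.6 GB, 4-bit: 1.9 GB
```

The 4-bit result of about 1.9 GB (roughly 1.8 GiB in binary units) lines up with the figure quoted above, and shows why lower precision is what makes phone-sized deployment realistic.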

SLMs are gaining popularity, especially for Edge AI, where AI processing happens directly on devices.

Examples of SLMs:

- Phi-3 Mini (Microsoft)

- Gemma 2B (Google)

- Mistral 7B

- TinyLlama

- OpenChat 3.5

Despite their small size, these models can still perform remarkably well in tasks such as reasoning, summarisation, translation, and more.

Strengths of SLMs

Efficiency and Speed

SLMs require less computational power and memory, which makes processing faster. This speed is crucial for real-time applications, such as voice assistants or chatbots on mobile devices.

Cost-Effective

SLMs are cheaper to train and deploy, making them accessible to startups, small businesses, and organisations with tight budgets.

Edge Compatibility

SLMs can run on resource-constrained devices, such as smartphones, wearables or IoT sensors, thereby reducing reliance on cloud servers.

Enhanced Privacy

By processing data locally, SLMs minimise the need to send sensitive information to the cloud, making them ideal for privacy-sensitive industries like finance or healthcare.

Customisability

SLMs can be fine-tuned for specific tasks, sectors, or industries (e.g., medical chatbots or legal document analysis) using smaller, high-quality datasets, often outperforming LLMs in niche applications.

Challenges of SLMs

Limited Capabilities

SLMs have a narrower knowledge base due to their smaller size and limited training data, which can result in less accurate or less nuanced responses for broad or complex queries.

Reduced Generalisation

Unlike LLMs, SLMs may require more fine-tuning to adapt to new tasks, as they rely less on broad transfer learning.

Performance Trade-Offs

For tasks requiring deep reasoning or extensive world knowledge, SLMs may underperform compared to LLMs.

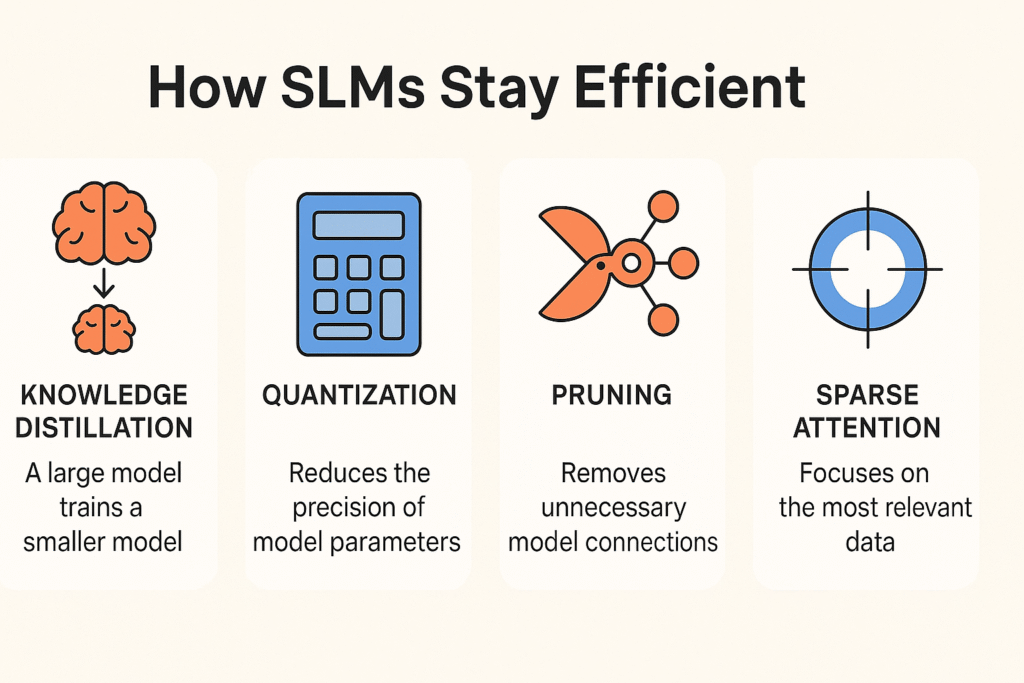

How Are SLMs Made So Efficient?

SLMs achieve their compact size and efficiency through advanced techniques:

Knowledge Distillation

A smaller model (the “student”) is trained to mimic the outputs of a large model (the “teacher”), typically using high-quality data.

This allows SLMs to retain much of the teacher’s capability in a smaller package.
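One common way to measure how well the student mimics the teacher is to compare their predicted probabilities with a KL divergence, after "softening" both with a temperature. The sketch below is a simplified illustration in plain Python. The scores are hypothetical, and real training pipelines usually combine this term with a standard loss on the true labels.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature = softer."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence KL(teacher || student) between softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

# Hypothetical next-word scores over a 3-word vocabulary.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 4.0, 1.0]

print(distillation_loss(teacher, close_student))  # small: student agrees
print(distillation_loss(teacher, far_student))    # larger: student disagrees
print(distillation_loss(teacher, teacher))        # 0.0: identical outputs
```

Training pushes this number toward zero, which is how the student inherits the teacher's behaviour without inheriting its size.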

Quantisation

This reduces the precision of a model’s parameters (e.g., from 32-bit to 8-bit numbers), thereby shrinking its size and speeding up processing without significant loss of accuracy.
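As a rough illustration, here is a minimal sketch of symmetric 8-bit quantisation: every weight is divided by one shared scale and rounded to an integer between -127 and 127. The weight values are made up, and production toolkits use more refined schemes (per-channel scales, 4-bit formats, calibration), so treat this as the basic idea only.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.82, -0.41, 0.05, -1.27, 0.33]  # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(q)  # [82, -41, 5, -127, 33]
worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"largest rounding error: {worst_error:.6f}")
```

Each stored value now needs 8 bits instead of 32, and the rounding error per weight is never more than half of `scale`.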

Pruning

Unnecessary connections in the model’s neural network are removed, making it leaner and faster. This is inspired by how the human brain optimises neural connections.
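A simple version of this idea is magnitude pruning: weights closest to zero are assumed to matter least and are set to exactly zero. The sketch below uses made-up weights and a hypothetical helper name. Real pruning is usually followed by some retraining to recover accuracy.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitudes."""
    num_to_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_indices = set(order[:num_to_prune])
    return [0.0 if i in pruned_indices else w for i, w in enumerate(weights)]

weights = [0.9, -0.02, 0.4, 0.01, -0.7, 0.03, 0.15, -0.05]  # made-up values
pruned = prune_by_magnitude(weights, sparsity=0.5)
print(pruned)  # [0.9, 0.0, 0.4, 0.0, -0.7, 0.0, 0.15, 0.0]
```

Half of the connections are gone, but the large weights that carry most of the signal are untouched.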

Sparse Attention

Some SLMs restrict attention to the most relevant parts of the input, for example a sliding window of recent tokens as in Mistral 7B, instead of comparing every word with every other word. This reduces computational needs, especially on long inputs.
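One common form of sparse attention is a sliding window, where each token only looks at a fixed number of recent tokens. The sketch below counts how many token pairs have to be compared with and without a window. The sequence length and window size are illustrative values, not the settings of any particular model.

```python
def full_causal_pairs(seq_len):
    """Each token attends to itself and every earlier token."""
    return seq_len * (seq_len + 1) // 2

def sliding_window_pairs(seq_len, window):
    """Each token attends to itself and at most `window - 1` earlier tokens."""
    return sum(min(position + 1, window) for position in range(seq_len))

def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when token i may attend to token j."""
    return [
        [0 <= i - j < window for j in range(seq_len)]
        for i in range(seq_len)
    ]

# Visualise a tiny mask: "x" marks an allowed comparison.
for row in sliding_window_mask(seq_len=6, window=3):
    print("".join("x" if allowed else "." for allowed in row))

print(full_causal_pairs(8192))           # 33558528
print(sliding_window_pairs(8192, 1024))  # 7864832
```

With these example numbers the window cuts the comparisons to under a quarter of the full count, and the saving grows as inputs get longer.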

These techniques make SLMs ideal for resource-constrained environments while maintaining strong performance for specific tasks.

Why the Industry Is Shifting Toward SLMs

Big Tech is waking up to the downsides of huge models:

- High inference cost

- Massive carbon footprints

- Limited accessibility outside the cloud

SLMs solve this:

- ✅ Lower cost to deploy

- ✅ Real-time performance

- ✅ Better privacy (on-device)

- ✅ Scalable to billions of devices globally

Use Cases: Where SLMs Shine

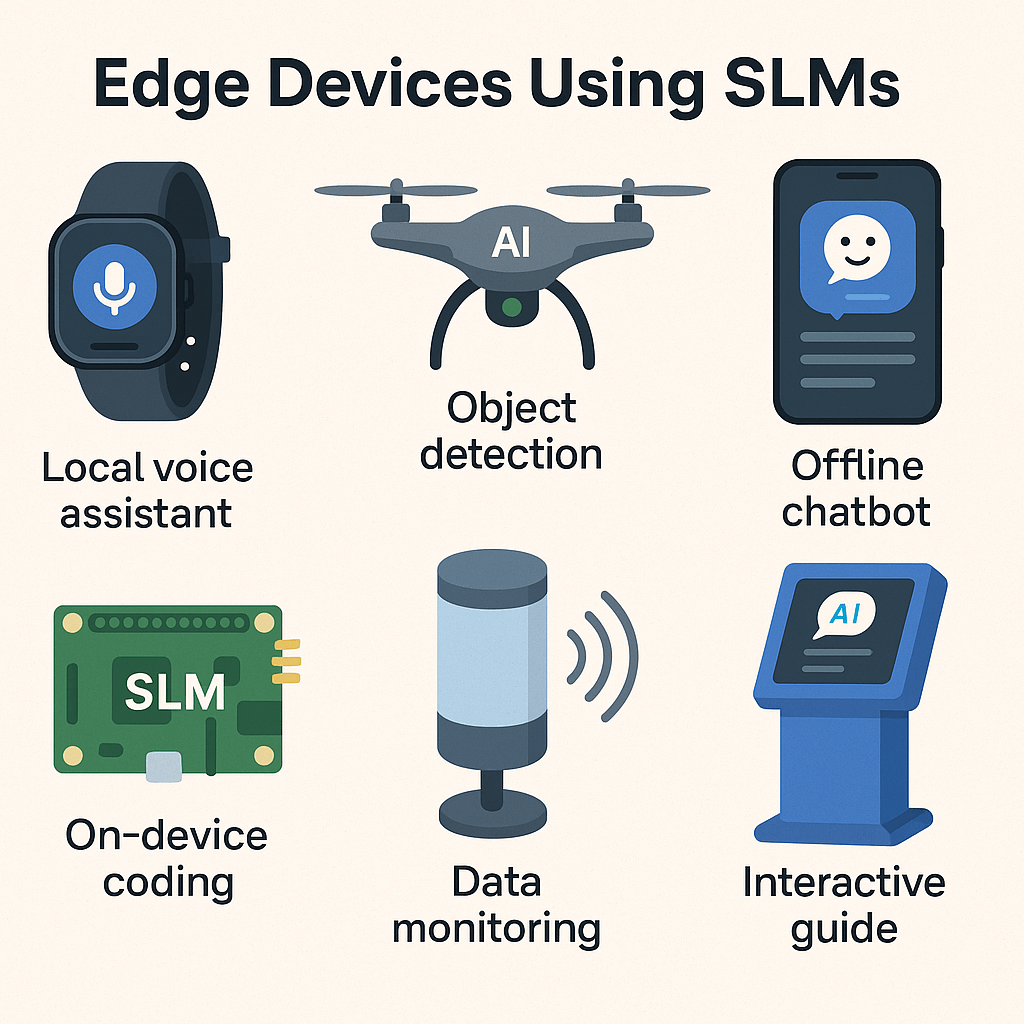

SLMs are transforming industries by enabling AI on edge devices. Here are some examples:

Healthcare: SLMs power on-device chatbots that answer patient queries or assist doctors with clinical notes, keeping sensitive data local.

Logistics: Real-time shipment tracking and optimisation on edge devices help companies identify inefficiencies without relying on the cloud.

Smart Devices: SLMs enable voice assistants on smartphones or smart speakers to respond quickly, even when offline, thereby enhancing the user experience.

Retail: SLMs analyse customer feedback or reviews on edge devices for real-time sentiment analysis, improving brand perception.

Manufacturing: Sensors equipped with SLMs detect defects on the factory floor, providing instant feedback without cloud delays.

SLMs and Edge AI: A Perfect Match

Edge AI refers to AI computations done locally without relying on cloud servers or the internet. SLMs are perfectly suited to edge environments where power, memory, and connectivity are limited.

Here’s where SLMs shine at the edge, with some real-world examples:

- A local chatbot in a clinic with no Wi-Fi

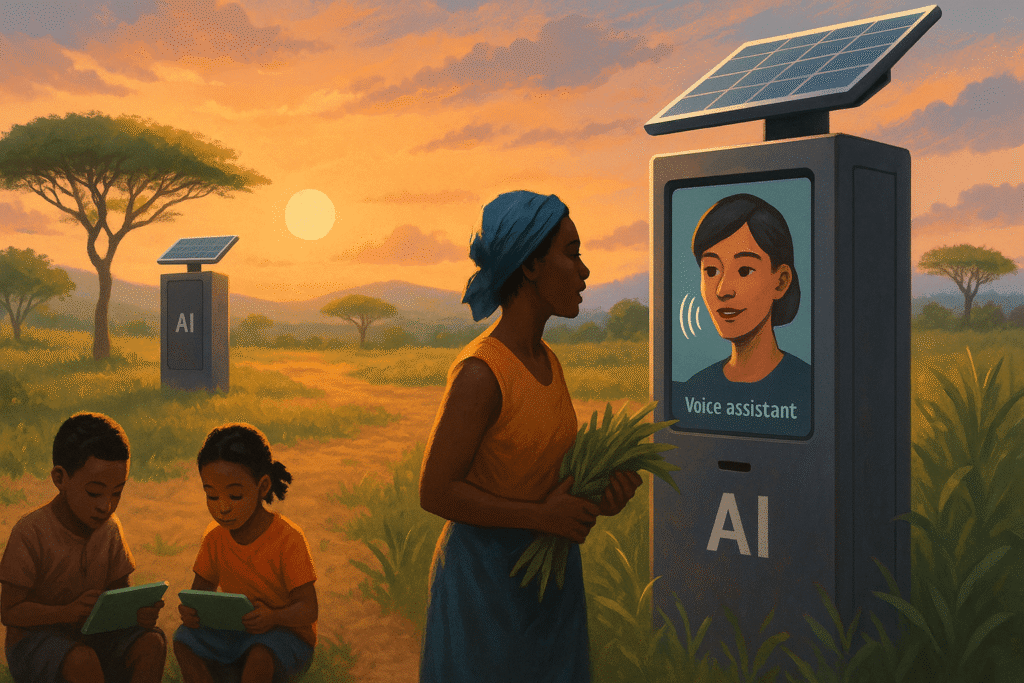

- Voice assistant for farmers in rural Kenya

- Offline educational tutor on low-end Android devices

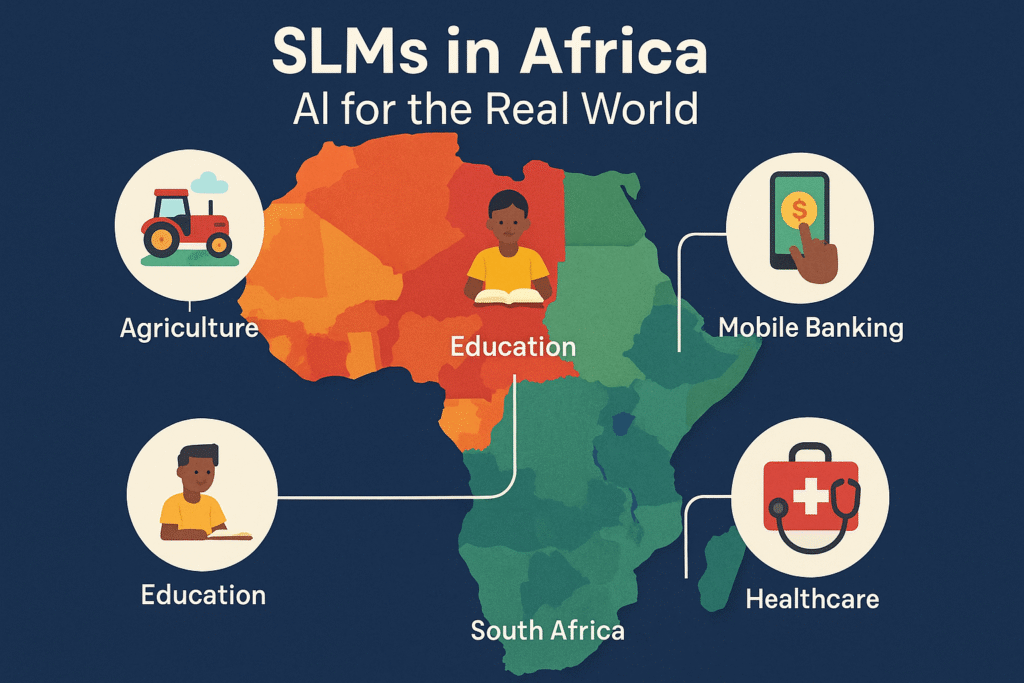

Why This Matters in Africa

Africa is mobile-first. Most people access the internet through smartphones with modest specs.

SLMs aren’t just theoretical in Africa. They’re practical tools today.

SLMs could power:

- AI-powered SMS bots in local languages

- Mobile banking and financial literacy tools

- Education apps that don’t require the internet

SLMs are not just a nice-to-have for Africa — they are necessary.

Performance: Can SLMs Compete with LLMs?

While LLMs still outperform SLMs in complex tasks, SLMs are closing the gap thanks to:

- Instruction tuning

- Fine-tuning for specific tasks

- RAG (Retrieval-Augmented Generation) to add external context

- Low-Rank Adaptation (LoRA) to specialise models affordably

In many narrow tasks, SLMs perform just as well, if not better.
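To see why LoRA makes specialisation affordable, it helps to count what actually gets trained. LoRA freezes the original weight matrix and trains two small matrices whose product is added to it. The sketch below compares the two counts for a single layer. The layer size and rank are hypothetical values chosen for illustration.

```python
def full_finetune_params(d_in, d_out):
    """Trainable values when the whole weight matrix W (d_out x d_in) is updated."""
    return d_in * d_out

def lora_params(d_in, d_out, rank):
    """Trainable values when W is frozen and only B (d_out x rank) and
    A (rank x d_in) are trained, so the layer uses W + B @ A."""
    return rank * (d_in + d_out)

# Hypothetical layer size and rank, chosen only for illustration.
d_in = d_out = 4096
rank = 8

full = full_finetune_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)

print(f"Full fine-tuning: {full:,} trainable values")    # 16,777,216
print(f"LoRA (rank {rank}): {lora:,} trainable values")  # 65,536
print(f"LoRA trains {lora / full:.2%} of the layer")     # 0.39%
```

Training well under 1% of the values per layer is what lets a small team adapt a model on modest hardware.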

Top SLMs in 2025 to Watch

| Model | Parameters | Publisher | Notable Strengths |
|---|---|---|---|
| Phi-3 Mini | 3.8B | Microsoft | Speed + Accuracy |
| Gemma | 2B | Google | Great performance, open |
| TinyLlama | 1.1B | Open-source community | Extreme efficiency |
| Mistral 7B | 7.3B | Mistral AI | Strong reasoning |
| OpenChat 3.5 | 7B | OpenChat | Chat-based tasks |

How to Get Started With SLMs

You don’t need GPUs or cloud APIs. Here’s how to try them:

- ✅ Hugging Face Transformers

- ✅ Ollama – Run models locally in 1 command

- ✅ LM Studio – No-code interface

- ✅ Google Colab – Try quantised SLMs in the browser
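As one possible starting point, here is a minimal sketch that talks to a model served by Ollama on your own machine, using only Python's standard library. It assumes Ollama is installed and running on its default local port, and that a model has already been pulled. The model name `phi3` is an assumption, so swap in whichever model you have.

```python
import json
import urllib.request

# Ollama's default local endpoint for text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_request(prompt, model="phi3"):
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt, model="phi3", timeout=120):
    """Send the prompt and return the generated text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]

if __name__ == "__main__":
    try:
        print(ask("Explain what a small language model is in one sentence."))
    except OSError as error:
        # Raised when no Ollama server is reachable on this machine.
        print(f"Could not reach Ollama: {error}")
```

Everything here runs locally: the prompt never leaves your machine, which is the privacy benefit discussed earlier.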

The Future of SLMs

The rise of SLMs signals a shift toward fit-for-purpose AI models tailored to specific needs rather than one-size-fits-all solutions.

As edge devices become more powerful (in line with long-running hardware trends such as Moore’s Law), SLMs will continue to grow in capability and adoption.

Posts on X highlight this trend, with some claiming that SLMs can be 20–30 times cheaper for targeted tasks and can outperform LLMs in specialised settings with proper fine-tuning.

What if tomorrow’s AI lived offline—and worked for everyone?

Expect to see:

- Retrieval-Augmented Generation (RAG): SLMs paired with on-device databases will enable personalised AI applications, such as analysing personal documents without requiring cloud access.

- Localised SLMs trained on Swahili, Yoruba, Hausa, and Zulu.

- Wider Adoption: From healthcare to IoT, SLMs will democratise AI, making it accessible to smaller organisations and regions with limited infrastructure.

- Multimodal SLMs: Models like Google’s Gemma 3n, which handle text, images, and audio, will expand the capabilities of Edge AI.

- Solar-powered edge devices running innovative models offline
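The on-device RAG idea from the list above can be sketched in a few lines: find the stored note most similar to the question, then place it in the prompt handed to the SLM. To stay dependency-free, this sketch swaps the usual embedding model for simple word-count similarity. The notes and helper names are made up for illustration.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine_similarity(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents):
    """Combine the retrieved context and the question into one prompt."""
    context = "\n".join(retrieve(question, documents))
    return (
        "Use only this context to answer.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )

# Made-up local notes standing in for an on-device database.
notes = [
    "Clinic opening hours are 8am to 5pm on weekdays.",
    "Maize should be planted at the start of the rainy season.",
    "Mobile money transfers above the daily limit need an ID check.",
]
print(build_prompt("When should I plant maize?", notes))
```

The resulting prompt can be passed to any local SLM. Because the knowledge lives in the notes rather than in the model, a small model can answer questions it was never trained on.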

SLMs are AI for the people, not just the cloud.

SLMs vs. LLMs: Which Should You Choose?

Choosing between SLMs and LLMs depends on your needs:

Choose LLMs if:

- You need a general-purpose model for complex tasks, such as advanced reasoning or generating broad content.

- You have access to significant computational resources and the budget to match.

- Your application doesn’t require real-time processing or offline functionality.

Choose SLMs if:

- You’re deploying AI on edge devices with limited resources.

- Privacy, low latency, or offline capabilities are critical.

- You need a cost-effective, customisable solution for specific tasks.

For many businesses, SLMs offer a practical way to integrate AI without the overhead of LLMs, especially in Edge AI scenarios.

Conclusion

Small Language Models are ushering in a new era of accessible, scalable AI.

For beginners, the key takeaway is straightforward: SLMs are enabling AI to be faster, cheaper, and more private, especially on edge devices. Whether you’re a business leader, developer, or curious user, the rise of SLMs means AI is becoming more accessible than ever before.

For Africa and the rest of the Global South, they represent a rare opportunity: the ability to leapfrog into the AI age without expensive infrastructure.

Smaller doesn’t mean weaker. It means smarter, leaner, and ready for the real world.

🧪 Want to try it yourself? Stay tuned — we’re releasing a tutorial on building your own SLM-powered AI chatbot next week.